Project Title

Real-time Coherent Video Style Transfer

Abstract

With the growing popularity of deep learning and computer vision, image style transfer using convolutional neural networks has attracted interest from researchers worldwide. Although mature solutions exist for image style transfer, they usually suffer from severe temporal inconsistency when applied to videos frame by frame. Several video style transfer models have recently been proposed to improve temporal consistency, yet they do not deliver fast processing speed, good perceptual style quality and high temporal consistency at the same time. In this project, we propose ReCoNet, a novel real-time video style transfer model that addresses this problem. ReCoNet is a feed-forward convolutional neural network that generates temporally coherent stylized videos in real time. Experimental results indicate that ReCoNet performs strongly both qualitatively and quantitatively.

Network Architecture

Because this is ongoing research that remains confidential until publication, the network architecture cannot be released at this stage. Please contact the project supervisor with any questions.

Loss Functions

Because this is ongoing research that remains confidential until publication, the loss functions cannot be released at this stage. Please contact the project supervisor with any questions.

Experimental Results

- Fast inference speed: up to 235 FPS (about 4.3 ms per frame) on an NVIDIA GTX 1080 Ti GPU

- High temporal coherence: low temporal error on each test scene

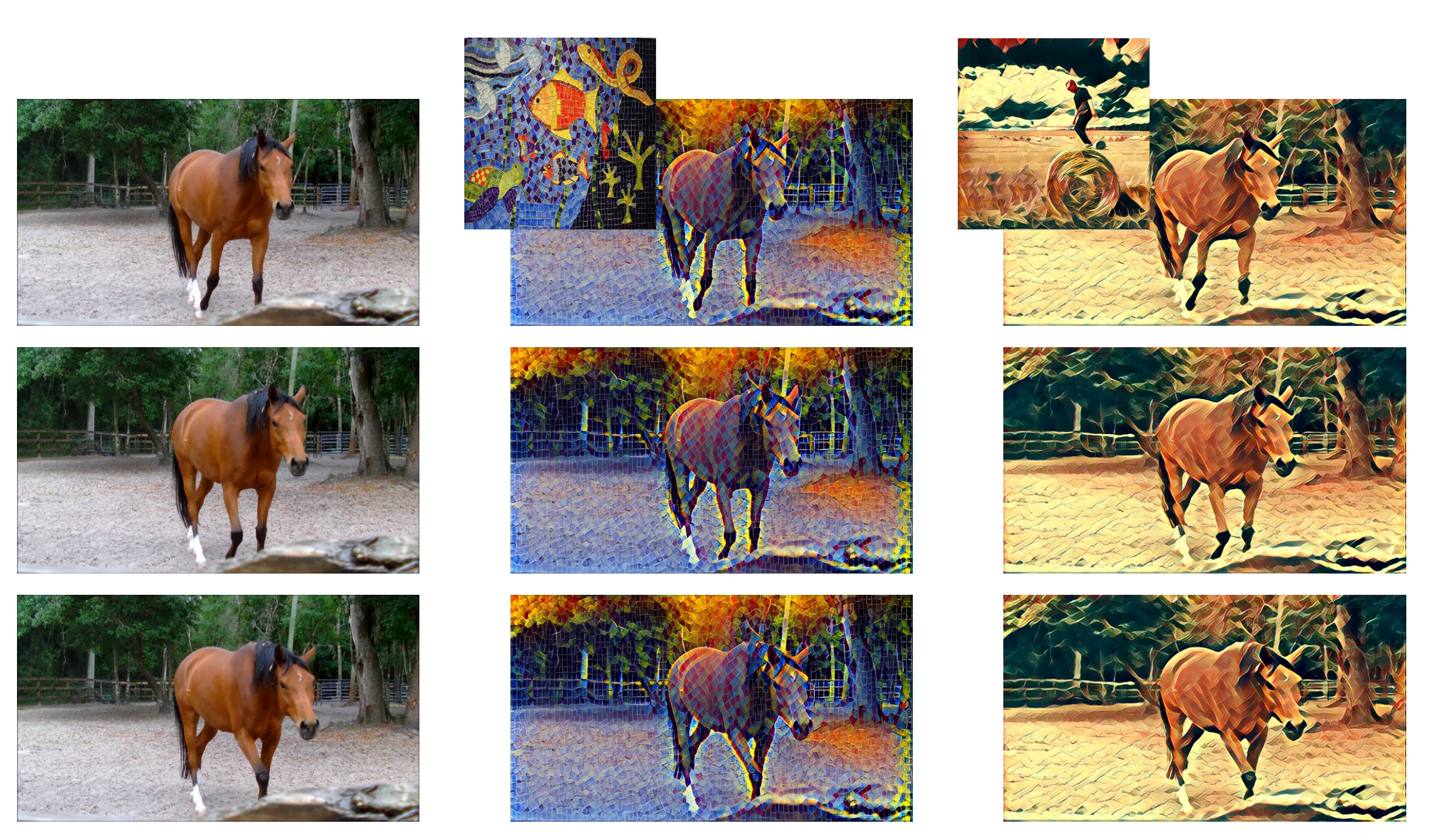

- Good perceptual style: stylized outputs remain perceptually similar to the style targets
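This page does not define how temporal error is computed. One common formulation in the video style transfer literature warps the previous stylized frame onto the current one with optical flow and measures the masked difference, E = sqrt( 1/(T-1) * sum_t [ (1/D) * sum_i M_t(i) * (O_t(i) - W_t(O_{t-1})(i))^2 ] ), where O_t is the stylized frame t, W_t the flow warp, M_t a non-occlusion mask and D the number of pixel values per frame. The sketch below assumes that definition, assumes the warped frames and masks are already computed, and stores each frame as a flat list of D values; it is not necessarily the exact metric behind the reported numbers.

```python
import math
from typing import Sequence


def temporal_error(
    outputs: Sequence[Sequence[float]],
    warped_prev: Sequence[Sequence[float]],
    masks: Sequence[Sequence[float]],
) -> float:
    """Root-mean-square masked difference between consecutive stylized frames.

    Assumed formulation (not taken from the project):
      outputs[k]     -- stylized frame O_t for each of the T-1 consecutive pairs
      warped_prev[k] -- O_{t-1} warped to frame t by optical flow (precomputed)
      masks[k]       -- per-pixel mask, 1 where the pixel is not occluded, else 0
    """
    if not outputs or not (len(outputs) == len(warped_prev) == len(masks)):
        raise ValueError("need the same, non-zero number of frame pairs in every input")

    total = 0.0
    for o_t, w_t, m_t in zip(outputs, warped_prev, masks):
        d = len(o_t)
        if len(w_t) != d or len(m_t) != d:
            raise ValueError("all frames must have the same number of pixel values")
        # Mean squared difference over the D pixel values, ignoring occluded pixels.
        total += sum(m * (o - w) ** 2 for o, w, m in zip(o_t, w_t, m_t)) / d

    # Average over the T-1 frame pairs, then take the square root.
    return math.sqrt(total / len(outputs))
```

A lower value means consecutive stylized frames agree more closely wherever motion can be tracked, which is what the temporal coherence claim above refers to.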

Streaming Application

A demonstration application has also been developed that performs video style transfer on any device with a modern GPU and a webcam. It displays the live webcam video and its stylized result simultaneously and lets the user choose among multiple styles.
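A minimal sketch of such a streaming loop, assuming OpenCV (`cv2.VideoCapture`, `cv2.imshow`, `cv2.waitKey`) for capture and display and NumPy for frame handling. The stylizers are hypothetical placeholders that simply blend each frame with a fixed colour, because the ReCoNet model itself is not released; a trained network would take their place. The names `STYLES`, `side_by_side` and `run_demo` are illustrative only.

```python
import numpy as np


def make_placeholder_style(tint):
    """Return a stand-in 'stylizer' that blends each frame with a fixed colour.

    This is NOT ReCoNet; it only lets the demo loop run end to end.
    """
    tint_arr = np.array(tint, dtype=np.float32)

    def stylize(frame):
        blended = frame.astype(np.float32) * 0.5 + tint_arr * 0.5
        return np.clip(blended, 0, 255).astype(np.uint8)

    return stylize


# Hypothetical style registry: keyboard key -> stylization function.
STYLES = {
    "1": make_placeholder_style((255, 0, 0)),
    "2": make_placeholder_style((0, 255, 0)),
    "3": make_placeholder_style((0, 0, 255)),
}


def side_by_side(frame, stylized):
    """Join the raw webcam frame and its stylized version horizontally."""
    if frame.shape != stylized.shape:
        raise ValueError("raw and stylized frames must have the same shape")
    return np.hstack([frame, stylized])


def run_demo(camera_index=0):
    """Capture webcam frames, stylize them and show both views until 'q' is pressed."""
    import cv2  # imported lazily so the helpers above work without OpenCV

    cap = cv2.VideoCapture(camera_index)
    current = "1"
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            view = side_by_side(frame, STYLES[current](frame))
            cv2.imshow("Video style transfer demo (sketch)", view)
            key = cv2.waitKey(1) & 0xFF
            if key == ord("q"):
                break
            if chr(key) in STYLES:  # number keys switch the active style
                current = chr(key)
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

On a machine with a webcam, calling `run_demo()` opens a single window with the raw and stylized views next to each other; keys 1 to 3 switch styles and q quits. Keeping the model behind a plain `frame -> frame` function makes it easy to swap the placeholders for real GPU-backed models.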

Conclusion

In this project, ReCoNet, a novel feed-forward convolutional neural network for video style transfer, is proposed. ReCoNet generates temporally coherent stylized videos at real-time speed while keeping the artistic style perceptually similar to the style targets. A streaming stylization application based on ReCoNet has also been developed; it simultaneously displays the video captured by a webcam and its stylized result, with multiple styles supported. Future work should investigate smaller networks capable of coherent style transfer at real-time or near-real-time speed on mobile devices without a GPU.