Issues addressed by MultiVision

|

|

Technologies to be developed

|

|

In this project, the following technologies will be developed

|

- Semi-semantic Video Processing for Video Indexing

This technology is related to the online extraction of contents and activities

descriptors in the proposed retrieval systems. We shall investigate a number of

possible features that could be efficiently extracted and indexed for retrieval

purpose in this part. The motion detection developed in the project UIM/118 can

serve as a good starting point for us because it can give us some primitive

information about the object behavior and movement. To achieve the best

performance, we aim at developing new technologies that could extract

additional informative descriptors for the detected objects (such as shape, color,

texture, etc.) for indexing in real-time.

- Video Indexing Scheme for Efficient Video Retrieval

This technology is related to the video indexing scheme based on the

semi-semantic descriptors. This part takes the semi-semantic descriptors as

input to index the corresponding videos. With good indexing scheme from this

technology, the videos of interest can be efficiently located and retrieved.

- Conceptual Query to Semantic Description Translation

This technology is related to the translation of the user specified conceptual

query to semi-semantic descriptions that will be used to match against those

generated from semi-semantic video processing. This part serves as an interface

between the user and retrieval systems, so that the users can easily specify their

retrieval criteria.

- Video Data Caching for Reliable Hard Disks Operations

This technology is related to the caching mechanism for video data which could

reduce the frequency of hard disks read-write operations and fragmentation

problems.

|

Back to top

|

Innovative use of existing technologies

|

This project will also use existing technologies in semi-semantic video analysis

innovatively to perform semi-semantic descriptors generation in the proposed retrieval

system. The research work involved in this aspect includes extensive study and trial of

large combinations of different video analysis tools for achieving the best performance in

real-time.

An immediate innovative use of the four newly developed technologies listed in (I)

together with the existing technologies is the realization of a reliable digital video

surveillance system with efficient content-based video retrieval support. The example in

(III) illustrates how video retrieval application can be implemented.

|

|

Back to top

|

Example retrieval application

|

|

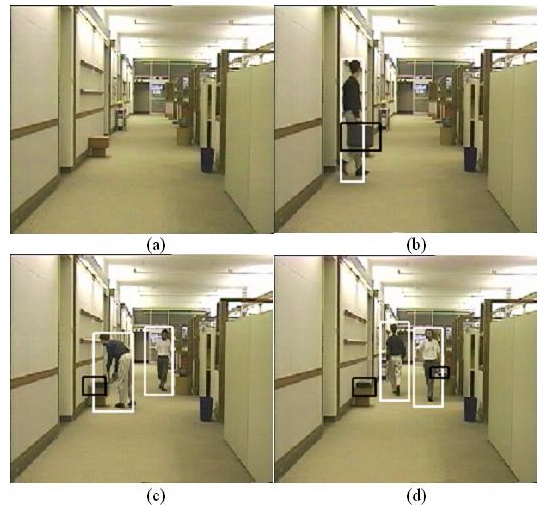

Figure 2 shows 4 snapshots (time order from left to right, top to bottom) from a video

sequences. Suppose the semi-semantic analysis can classify objects into two classes:

human and non-human and indexed them accordingly. Figure 2 shows the moving objects

that would be indexed during the encoding process (moving human in white bounding

boxes and moving non-human objects in black bounding boxes).

|

|

Figure 2: Moving humans (in white bounding boxes) and moving objects (in black

bounding boxes) extracted from an example video sequence.

|

Now suppose a user want to retrieve a video segment in which a man dropped his

briefcase. The conceptual query translation module will first translate it to a

semi-semantic description for retrieval purpose. In this particular example, it could well

be translated into the query which retrieves all video segments with moving human and

non-human objects. Then among all the video segments containing the four snapshots in

Figure 2, only those which contain Figure 2(b)-(d) would be retrieved. Note that in

surveillance type of video, most of the videos are similar to that in Figure 2(a). That

means the semi-semantic approach in retrieval is usually good enough in isolating out the

related video segments.

From this example, it can also be seen that the proposed system can be easily extended to

a full-scale semantic video retrieval system. If full-scale semantic tools are available, the

extracted videos from the semi-semantic retrieval could be passed to the full-scale

semantic analysis process for further analysis. In this particular example, the full-scale

semantic analysis process could check for each detected non-human objects to see if it is

a briefcase or not. As such, only the video segments which contain Figure 2(b) and 2(c)

will satisfy this criterion. It may also verify that the action involved satisfy the user query

(i.e. The man dropped the briefcase). Together with this constraint, only the video

segment containing the snapshot Figure 2(c) would be retrieved. There is no doubt that

this full-scale semantic processing involves more sophisticated image/video processing

techniques and should be a more time consuming process when compared with the

semi-semantic analysis. However, under our proposed architecture, the full-scale

semantic processing has to be performed only on those regions of interest (e.g. the

regions within the bounding boxes in Figure 2) instead of the whole video frame. Besides,

since the semi-semantic retrieval process should have removed most of the irrelevant

video segments, it is expected that the proposed system can greatly improve the speed

and accuracy of the retrieval tasks, resulting in a robust, efficient and extensible video

retrieval systems.

|

Back to top

|