ME-PCN: Point Completion Conditioned on Mask Emptiness

Bingchen Gong, Yinyu Nie, Yiqun Lin, Xiaoguang Han, and Yizhou Yu. International Conference on Computer Vision (ICCV) 2021

Point completion refers to completing the missing geometries of an object from incomplete observations. Mainstream methods predict the missing shapes by decoding a global feature learned from the input point cloud, which often leads to deficient results in preserving topology consistency and surface details. In this work, we present ME-PCN, a point completion network that leverages emptiness in 3D shape space. Given a single depth scan, previous methods often encode the occupied partial shapes while ignoring the empty regions (e.g., holes) in depth maps. In contrast, we argue that these 'emptiness' clues indicate shape boundaries that can be used to improve topology representation and detail granularity on surfaces. Specifically, our ME-PCN encodes both the occupied point cloud and the neighboring 'empty points'. It estimates coarse-grained but complete and reasonable surface points in the first stage, followed by a refinement stage to produce fine-grained surface details. Comprehensive experiments verify that our ME-PCN achieves better qualitative and quantitative performance than the state of the art. We further show that our 'emptiness' design is lightweight and easy to embed in existing methods, where it consistently improves the CD and EMD scores.
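The CD score mentioned in this abstract is the Chamfer distance between a predicted and a reference point set. The following is a minimal pure-Python sketch of one common convention (mean squared nearest-neighbour distance, summed over both directions); the exact normalisation used in the paper is not stated here, so treat the convention and the helper name `chamfer_distance` as assumptions.

```python
def chamfer_distance(a, b):
    """Symmetric Chamfer distance between two non-empty point sets.

    Assumed convention: mean of squared nearest-neighbour distances,
    summed over both directions. Other conventions exist.
    """
    def one_way(src, dst):
        total = 0.0
        for p in src:
            # Squared distance from p to its nearest neighbour in dst.
            total += min(sum((pi - qi) ** 2 for pi, qi in zip(p, q)) for q in dst)
        return total / len(src)

    return one_way(a, b) + one_way(b, a)


# Toy data: a "partial" scan that misses one point of the "complete" shape.
partial = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
complete = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]

print(chamfer_distance(partial, partial))   # identical sets give 0.0
print(chamfer_distance(partial, complete))  # only the missing point contributes
```

This brute-force version costs O(|a| * |b|); practical evaluations typically use a spatial index or a GPU implementation.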

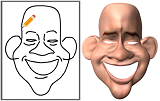

DeepSketch2Face: A Deep Learning Based Sketching System for 3D Face and Caricature Modeling

Xiaoguang Han, Chang Gao, and Yizhou Yu. SIGGRAPH 2017, (PDF, Suppl) [BibTex]

Face modeling has received much attention in the field of visual computing. There exist many scenarios, including cartoon characters, avatars for social media, 3D face caricatures as well as face-related art and design, where low-cost interactive face modeling is a popular approach, especially among amateur users. In this paper, we propose a deep learning based sketching system for 3D face and caricature modeling. This system has a labor-efficient sketching interface that allows the user to draw freehand, imprecise yet expressive 2D lines representing the contours of facial features. A novel CNN-based deep regression network is designed for inferring 3D face models from 2D sketches. Our network fuses both CNN and shape-based features of the input sketch, and has two independent branches of fully connected layers generating independent subsets of coefficients for a bilinear face representation. Our system also supports gesture-based interactions for users to further manipulate initial face models. Both user studies and numerical results indicate that our sketching system can help users create face models quickly and effectively. A significantly expanded face database with diverse identities, expressions and levels of exaggeration is constructed to promote further research and evaluation of face modeling techniques.

High-Resolution Shape Completion Using Deep Neural Networks for Global Structure and Local Geometry Inference

Xiaoguang Han*, Zhen Li*, Haibin Huang, Evangelos Kalogerakis, and Yizhou Yu. International Conference on Computer Vision (ICCV) 2017, (PDF, Suppl) [BibTex]

We propose a data-driven method for recovering missing parts of 3D shapes. Our method is based on a new deep learning architecture consisting of two sub-networks: a global structure inference network and a local geometry refinement network. The global structure inference network incorporates a long short-term memorized context fusion module (LSTM-CF) that infers the global structure of the shape based on multi-view depth information provided as part of the input. It also includes a 3D fully convolutional (3DFCN) module that further enriches the global structure representation according to volumetric information in the input. Under the guidance of the global structure network, the local geometry refinement network takes as input local 3D patches around missing regions, and progressively produces a high-resolution, complete surface through a volumetric encoder-decoder architecture. Our method jointly trains the global structure inference and local geometry refinement networks in an end-to-end manner. We perform qualitative and quantitative evaluations on six object categories, demonstrating that our method outperforms existing state-of-the-art work on shape completion.

Fast and Exact Discrete Geodesic Computation Based on Triangle-Oriented Wavefront Propagation

Yipeng Qin, Xiaoguang Han, Hongchuan Yu, Yizhou Yu, and Jianjun Zhang. SIGGRAPH 2016, (PDF, Suppl)

Computing discrete geodesic distance over triangle meshes is one of the fundamental problems in computational geometry and computer graphics. In this problem, an effective window pruning strategy can significantly affect the actual running time. Due to its importance, we conduct an in-depth study of window pruning operations in this paper, and produce an exhaustive list of scenarios where one window can make another window partially or completely redundant. To identify a maximal number of redundant windows using such pairwise cross checking, we propose a set of procedures to synchronize local window propagation within the same triangle by simultaneously propagating a collection of windows from one triangle edge to its two opposite edges. On the basis of such synchronized window propagation, we design a new geodesic computation algorithm based on a triangle-oriented region growing scheme. Our geodesic algorithm can remove most of the redundant windows at the earliest possible stage, thus significantly reducing computational cost and memory usage at later stages. In addition, by adopting triangles instead of windows as the primitive in propagation management, our algorithm significantly cuts down the data management overhead. As a result, it runs 4-15 times faster than MMP and ICH algorithms, 2-4 times faster than FWP-MMP and FWP-CH algorithms, and also incurs the least memory usage.
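To make the problem in this abstract concrete, here is a small sketch of a much simpler baseline, not the window-propagation algorithm described above: Dijkstra's algorithm restricted to mesh edges. Because paths may not cross triangle interiors, it only gives an upper bound on the exact geodesic distance, which is the gap that exact methods close. The function name and the toy mesh are illustrative assumptions.

```python
import heapq
import math


def edge_graph_distances(vertices, triangles, source):
    """Shortest-path distances from `source` along mesh edges (Dijkstra).

    This is an upper bound on the exact geodesic distance, since exact
    geodesics may cut across triangle interiors.
    """
    # Build a weighted adjacency map from the triangle list.
    adj = {i: {} for i in range(len(vertices))}
    for tri in triangles:
        for k in range(3):
            u, v = tri[k], tri[(k + 1) % 3]
            w = math.dist(vertices[u], vertices[v])
            adj[u][v] = w
            adj[v][u] = w

    dist = [math.inf] * len(vertices)
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale queue entry
        for v, w in adj[u].items():
            if d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist


# Unit square split into two triangles along the diagonal between vertices 1 and 3.
square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
faces = [(0, 1, 3), (1, 2, 3)]
d = edge_graph_distances(square, faces, source=0)
print(d[2], math.sqrt(2.0))  # edge-path length versus the true straight-line geodesic
```

On this flat square the edge path from vertex 0 to vertex 2 has length 2, while the true geodesic crosses the shared diagonal and has length sqrt(2).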

A Fast Modal Space Transform for Robust Nonrigid Shape Retrieval

Jianbo Ye and Yizhou Yu. The Visual Computer, Vol 32, No 5, 2016, PDF

A short version of this paper appears in ACM International Conference on Multimedia Retrieval 2013.

Nonrigid or deformable 3D objects are common in many application domains. Retrieval of such objects in large databases based on shape similarity is still a challenging problem. In this paper, we take advantage of functional operators as characterizations of shape deformation, and further propose a framework to design novel shape signatures for encoding nonrigid geometries. Our approach constructs a context-aware integral kernel operator on a manifold, then applies modal analysis to map this operator into a low-frequency functional representation, called fast functional transform, and finally computes its spectrum as the shape signature. In a nutshell, our method is fast, isometry-invariant, discriminative, smooth and numerically stable with respect to multiple types of perturbations. Experimental results demonstrate that our new shape signature for nonrigid objects can outperform all methods participating in the nonrigid track of the SHREC'11 contest. It is also the second best performing method in the real human model track of SHREC'14.

Detail-Preserving Controllable Deformation from Sparse Examples

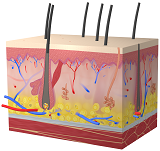

Haoda Huang, KangKang Yin, Ling Zhao, Yue Qi, Yizhou Yu, and Xin Tong. IEEE Transactions on Visualization and Computer Graphics, Vol 18, No 8, 2012, PDF

Recent advances in laser scanning technology have made it possible to faithfully scan a real object with tiny geometric details, such as pores and wrinkles. However, a faithful digital model should not only capture static details of the real counterpart but also be able to reproduce the deformed versions of such details. In this paper, we develop a data-driven model that has two components; the first accommodates smooth large-scale deformations and the second captures high-resolution details. Large-scale deformations are based on a nonlinear mapping between sparse control points and bone transformations. A global mapping, however, would fail to synthesize realistic geometries from sparse examples, for highly deformable models with a large range of motion. The key is to train a collection of mappings defined over regions locally in both the geometry and the pose space. Deformable fine-scale details are generated from a second nonlinear mapping between the control points and per-vertex displacements. We apply our modeling scheme to scanned human hand models, scanned face models, face models reconstructed from multiview video sequences, and manually constructed dinosaur models. Experiments show that our deformation models, learned from extremely sparse training data, are effective and robust in synthesizing highly deformable models with rich fine features, for keyframe animation as well as performance-driven animation. We also compare our results with those obtained by alternative techniques.

Object-Space Multiphase Implicit Functions

Zhan Yuan, Yizhou Yu, and Wenping Wang. SIGGRAPH 2012, PDF

Implicit functions have a wide range of applications in entertainment, engineering and medical imaging. A standard two-phase implicit function only represents the interior and exterior of a single object. To facilitate solid modeling of heterogeneous objects with multiple internal regions, object-space multiphase implicit functions are much desired. Multiphase implicit functions have much potential in modeling natural organisms, heterogeneous mechanical parts and anatomical atlases. In this paper, we introduce a novel class of object-space multiphase implicit functions that are capable of accurately and compactly representing objects with multiple internal regions. Our proposed multiphase implicit functions facilitate true object-space geometric modeling of heterogeneous objects with non-manifold features. We present multiple methods to create object-space multiphase implicit functions from existing data, including meshes and segmented medical images. Our algorithms are inspired by machine learning algorithms for training multicategory max-margin classifiers. Comparisons demonstrate that our method achieves an error rate one order of magnitude smaller than alternative techniques.
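One way to picture a multiphase implicit function, in the spirit of the multicategory classifier analogy in this abstract, is to keep one scalar function per region and assign a point to the region whose function is largest. The sketch below illustrates that idea only; the sphere-shaped phase functions, the constant background phase, and the helper names are hypothetical and are not taken from the paper.

```python
def make_sphere_phase(center, radius):
    """Hypothetical phase function: positive inside a sphere, negative outside."""
    def f(p):
        d2 = sum((pi - ci) ** 2 for pi, ci in zip(p, center))
        return radius ** 2 - d2
    return f


def phase_label(phases, p):
    """Index of the phase function with the largest value at point p."""
    values = [f(p) for f in phases]
    return max(range(len(phases)), key=lambda i: values[i])


phases = [
    lambda p: 0.0,                             # phase 0: exterior / background
    make_sphere_phase((-1.0, 0.0, 0.0), 1.5),  # phase 1: left internal region
    make_sphere_phase((1.0, 0.0, 0.0), 1.5),   # phase 2: right internal region
]

for point in [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 0.0, 0.0)]:
    print(point, phase_label(phases, point))
```

The two spheres overlap, yet every point receives exactly one label, so the internal interface between phases 1 and 2 (here the plane x = 0) is shared by both regions without gaps or double coverage. A standard two-phase implicit function cannot express such an internal boundary.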

Multiscale Vector Volumes

Lvdi Wang, Yizhou Yu, Kun Zhou, and Baining Guo. SIGGRAPH Asia 2011, PDF

We introduce multiscale vector volumes, a compact vector representation for volumetric objects with complex internal structures spanning a wide range of scales. With our representation, an object is decomposed into components and each component is modeled as an SDF tree, a novel data structure that uses multiple signed distance functions (SDFs) to further decompose the volumetric component into regions. Multiple signed distance functions collectively can represent non-manifold surfaces and deliver a powerful vector representation for complex volumetric features. We use multiscale embedding to combine object components at different scales into one complex volumetric object. As a result, regions with dramatically different scales and complexities can co-exist in an object. To facilitate volumetric object authoring and editing, we have also developed a scripting language and a GUI prototype. With the help of a recursively defined spatial indexing structure, our vector representation supports fast random access, and arbitrary cross sections of complex volumetric objects can be visualized in real time.

A Deformation Transformer for Real-Time Cloth Animation

Wei-Wen Feng, Yizhou Yu, and Byung-Uck Kim. SIGGRAPH 2010, [BibTex], (PDF, datasets)

Achieving interactive performance in cloth animation has significant implications in computer games and other interactive graphics applications. Although much progress has been made, real-time high-quality results that preserve dynamic folds and wrinkles well remain highly desirable. In this paper, we introduce a hybrid method for real-time cloth animation. It relies on data-driven models to capture the relationship between cloth deformations at two resolutions. Such data-driven models are responsible for transforming low-quality simulated deformations at the low resolution into high-resolution cloth deformations with dynamically introduced fine details. Our data-driven transformation is trained using rotation-invariant quantities extracted from the cloth models, and is independent of the simulation technique chosen for the lower resolution model. We have also developed a fast collision detection and handling scheme based on dynamically transformed bounding volumes. All the components in our algorithm can be efficiently implemented on programmable graphics hardware to achieve an overall real-time performance on high-resolution cloth models.

Feature-Preserving Triangular Geometry Images for Level-of-Detail Representation of Static and Skinned Meshes

Wei-Wen Feng, Byung-Uck Kim, Yizhou Yu, Liang Peng, and John Hart. ACM Transactions on Graphics, 2010, PDF

Geometry images resample meshes to represent them as texture for efficient GPU processing by forcing a regular parameterization that often incurs a large amount of distortion. Previous approaches broke the geometry image into multiple rectangular or irregular charts to reduce distortion, but complicated the automatic level of detail one gets from MIP-maps of the geometry image.

We introduce triangular-chart geometry images and show this new approach better supports the GPU-side representation and display of skinned dynamic meshes, with support for feature preservation, bounding volumes and view-dependent level of detail. Triangular charts pack efficiently, simplify the elimination of T-junctions, arise naturally from an edge-collapse simplification base mesh, and lay out more flexibly to allow their edges to follow curvilinear mesh features. To support the construction and application of triangular-chart geometry images, this paper introduces a new spectral clustering method for feature detection, and new methods for incorporating skinning weights and skinned bounding boxes into the representation. This results in a ten-fold improvement in fidelity when compared to quad-chart geometry images.
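The "automatic level of detail one gets from MIP-maps" mentioned in this abstract can be illustrated on the simplest case, a single square geometry image: each texel stores an (x, y, z) position, and a coarser level is obtained by averaging 2x2 blocks. This sketch covers only that baseline idea, not the triangular-chart construction; the function name and the toy grid are assumptions.

```python
def downsample_geometry_image(img):
    """One MIP level: average each 2x2 block of (x, y, z) samples.

    Assumes `img` is a list of rows with even height and width.
    """
    rows, cols = len(img), len(img[0])
    out = []
    for r in range(0, rows, 2):
        row = []
        for c in range(0, cols, 2):
            block = [img[r][c], img[r][c + 1], img[r + 1][c], img[r + 1][c + 1]]
            row.append(tuple(sum(p[k] for p in block) / 4 for k in range(3)))
        out.append(row)
    return out


# Toy 4x4 geometry image sampling the plane z = 0 on an integer grid.
fine = [[(float(c), float(r), 0.0) for c in range(4)] for r in range(4)]
coarse = downsample_geometry_image(fine)
print(coarse)
```

Because the samples lie on a regular grid, the coarser mesh needs no extra connectivity data: neighbouring texels are implicitly connected at every level.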

Robust Feature-Preserving Mesh Denoising Based on Consistent Sub-Neighborhoods

Hanqi Fan, Yizhou Yu, and Qunsheng Peng. IEEE Transactions on Visualization and Computer Graphics, Vol 16, No 2, 2010, [BibTex], PDF

In this paper, we introduce a feature-preserving denoising algorithm. It is built on the premise that the underlying surface of a noisy mesh is piecewise smooth, and a sharp feature lies on the intersection of multiple smooth surface regions. A vertex close to a sharp feature is likely to have a neighborhood that includes distinct smooth segments. By defining the consistent sub-neighborhood as the segment whose geometry and normal orientation are most consistent with those of the vertex, we can completely remove the influence from neighbors lying on other segments during denoising. Our method identifies piecewise smooth sub-neighborhoods using a robust density-based clustering algorithm based on shared nearest neighbors.

In our method, we obtain an initial estimate of vertex normals and curvature tensors by robustly fitting a local quadric model. An anisotropic filter based on optimal estimation theory is further applied to smooth the normal field and the curvature tensor field. This is followed by second-order bilateral filtering, which better preserves curvature details and alleviates volume shrinkage during denoising. The support of these filters is defined by the consistent sub-neighborhood of a vertex. We have applied this algorithm to both generic and CAD models, and sharp features, such as edges and corners, are very well preserved.
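For readers unfamiliar with bilateral filtering, the following sketch shows the basic first-order version on a 1D scalar signal: each sample is replaced by a weighted average of its neighbours, with weights that fall off both with distance and with difference in value, so samples across a sharp step barely influence each other. This is a simplified analogue, not the second-order filter on normal and curvature fields described above; the function name and parameter values are assumptions.

```python
import math


def bilateral_filter_1d(signal, radius, sigma_s, sigma_r):
    """Basic bilateral filter on a list of floats.

    sigma_s controls the spatial falloff, sigma_r the falloff with
    difference in value (the edge-preserving term).
    """
    n = len(signal)
    out = []
    for i in range(n):
        num = 0.0
        den = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            spatial = (i - j) ** 2 / (2.0 * sigma_s ** 2)
            value = (signal[i] - signal[j]) ** 2 / (2.0 * sigma_r ** 2)
            w = math.exp(-(spatial + value))
            num += w * signal[j]
            den += w
        out.append(num / den)  # den >= 1 because j == i contributes weight 1
    return out


# Noisy step: small noise on both sides of a sharp jump from 0 to 10.
step = [0.0, 0.1, -0.1, 0.0, 10.0, 10.1, 9.9, 10.0]
smoothed = bilateral_filter_1d(step, radius=2, sigma_s=1.0, sigma_r=0.5)
print([round(v, 3) for v in smoothed])
```

The noise on each side is averaged out while the jump between indices 3 and 4 stays sharp, which is the behaviour a feature-preserving mesh filter aims for at edges and corners.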

Real-Time Data-Driven Deformation Using Kernel Canonical Correlation Analysis

Wei-Wen Feng, Byung-Uck Kim, and Yizhou Yu. SIGGRAPH 2008, [BibTex], PDF

Achieving intuitive control of animated surface deformation while observing a specific style is an important but challenging task in computer graphics. Solutions to this task can find many applications in data-driven skin animation, computer puppetry, and computer games. In this paper, we present an intuitive and powerful animation interface to simultaneously control the deformation of a large number of local regions on a deformable surface with a minimal number of control points. Our method learns suitable deformation subspaces from training examples, and generates new deformations on the fly according to the movements of the control points. Our contributions include a novel deformation regression method based on kernel Canonical Correlation Analysis (CCA) and a Poisson-based translation solving technique for easy and fast deformation control based on examples. Our run-time algorithm can be implemented on GPUs and can achieve a few hundred frames per second even for large datasets with hundreds of training examples.

Gradient Domain Editing of Deforming Mesh Sequences

Weiwei Xu, Kun Zhou, Yizhou Yu, Qifeng Tan, Qunsheng Peng, and Baining Guo. SIGGRAPH 2007, PDF

Many graphics applications, including computer games and 3D animated films, make heavy use of deforming mesh sequences. In this paper, we generalize gradient domain editing to deforming mesh sequences. Our framework is keyframe based. Given sparse and irregularly distributed constraints at unevenly spaced keyframes, our solution first adjusts the meshes at the keyframes to satisfy these constraints, and then smoothly propagates the constraints and deformations at keyframes to the whole sequence to generate a new deforming mesh sequence. To achieve convenient keyframe editing, we have developed an efficient alternating least-squares method. It harnesses the power of subspace deformation and two-pass linear methods to achieve high-quality deformations. We have also developed an effective algorithm to define boundary conditions for all frames using handle trajectory editing. Our deforming mesh editing framework has been successfully applied to a number of editing scenarios with increasing complexity, including footprint editing, path editing, temporal filtering, handle-based deformation mixing, and spacetime morphing.

A Fast Multigrid Algorithm for Mesh Deformation

Lin Shi, Yizhou Yu, Nathan Bell, and Wei-Wen Feng. SIGGRAPH 2006, PDF

In this paper, we present a multigrid technique for efficiently deforming large surface and volume meshes. We show that a previous least-squares formulation for distortion minimization reduces to a Laplacian system on a general graph structure for which we derive an analytic expression. We then describe an efficient multigrid algorithm for solving the relevant equations. Here we develop novel prolongation and restriction operators used in the multigrid cycles. Combined with a simple but effective graph coarsening strategy, our algorithm can outperform other multigrid solvers and the factorization stage of direct solvers in both time and memory costs for large meshes. It is demonstrated that our solver can trade off accuracy for speed to achieve greater interactivity, which is attractive for manipulating large meshes. Our multigrid solver is particularly well suited for a mesh editing environment which does not permit extensive precomputation. Experimental evidence of these advantages is provided on a number of meshes with a wide range of sizes. With our mesh deformation solver, we also successfully demonstrate that visually appealing mesh animations can be generated from both motion capture data and a single base mesh even when they are inconsistent.
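The structure of a multigrid cycle (smooth, restrict the residual, solve a coarse problem, prolong the correction, smooth again) can be sketched on the textbook 1D Poisson problem -u'' = f with zero boundary values. This is standard geometric multigrid with full-weighting restriction and linear interpolation, not the graph-based operators developed in the paper; all function names and parameters below are assumptions.

```python
def residual(u, f, h):
    """r = f - A u for the 1D Laplacian A = tridiag(-1, 2, -1) / h^2."""
    n = len(u)
    r = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        r.append(f[i] - (2.0 * u[i] - left - right) / (h * h))
    return r


def jacobi(u, f, h, sweeps=2, omega=2.0 / 3.0):
    """Weighted Jacobi smoothing (the diagonal of A is 2 / h^2)."""
    for _ in range(sweeps):
        r = residual(u, f, h)
        u = [ui + omega * (h * h / 2.0) * ri for ui, ri in zip(u, r)]
    return u


def v_cycle(u, f, h):
    """One V-cycle; len(u) must be of the form 2**k - 1."""
    n = len(u)
    if n == 1:
        return [f[0] * h * h / 2.0]        # exact solve on the coarsest grid
    u = jacobi(u, f, h)                    # pre-smoothing
    r = residual(u, f, h)
    m = (n - 1) // 2
    # Restriction: full weighting onto the coarse grid.
    rc = [(r[2 * j] + 2.0 * r[2 * j + 1] + r[2 * j + 2]) / 4.0 for j in range(m)]
    ec = v_cycle([0.0] * m, rc, 2.0 * h)   # coarse-grid error equation
    # Prolongation: linear interpolation of the coarse correction.
    e = [0.0] * n
    for j in range(m):
        e[2 * j + 1] += ec[j]
        e[2 * j] += ec[j] / 2.0
        e[2 * j + 2] += ec[j] / 2.0
    u = [ui + ei for ui, ei in zip(u, e)]
    return jacobi(u, f, h)                 # post-smoothing


n = 31
h = 1.0 / (n + 1)
f = [1.0] * n
u = [0.0] * n
for _ in range(10):
    u = v_cycle(u, f, h)
print(max(abs(ri) for ri in residual(u, f, h)))  # residual after 10 cycles
print(u[n // 2])  # midpoint value; the exact solution x(1 - x)/2 gives 0.125
```

Stopping after fewer cycles yields a less accurate but cheaper solution, which mirrors the accuracy-for-speed trade-off described in the abstract.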

Mesh Editing with Poisson-Based Gradient Field Manipulation

Yizhou Yu, Kun Zhou, Dong Xu, Xiaohan Shi, Hujun Bao, Baining Guo, and Harry Shum. SIGGRAPH 2004, PDF

In this paper, we introduce a novel approach to mesh editing with the Poisson equation as the theoretical foundation. The most distinctive feature of this approach is that it modifies the original mesh geometry implicitly through gradient field manipulation. Our approach can produce desirable and pleasing results for both global and local editing operations, such as deformation, object merging, and smoothing. With the help of a few novel interactive tools, these operations can be performed conveniently with a small amount of user interaction. Our technique has three key components: a basic mesh solver based on the Poisson equation, a gradient field manipulation scheme using local transforms, and a generalized boundary condition representation based on local frames. Experimental results indicate that our framework can outperform previous related mesh editing techniques.
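The idea of editing geometry implicitly through its gradient field can be sketched in 1D: take the differences of a sampled function, modify them, then recover the function whose differences best match the edited ones under fixed boundary values. Minimising the sum of (f[i+1] - f[i] - g[i])^2 gives the discrete Poisson system 2 f[k] - f[k-1] - f[k+1] = g[k-1] - g[k] at interior samples. The sketch below solves it with the Thomas algorithm; it is a 1D analogue under these assumptions, not the mesh solver from the paper, and the function name is hypothetical.

```python
def solve_poisson_1d(grad, left, right):
    """Recover samples f with f[0] = left, f[-1] = right from target differences.

    `grad[i]` is the desired value of f[i+1] - f[i]; needs len(grad) >= 1.
    """
    n = len(grad) + 1          # number of samples
    m = n - 2                  # number of interior unknowns
    if m == 0:
        return [left, right]
    rhs = [grad[k - 1] - grad[k] for k in range(1, n - 1)]
    rhs[0] += left             # move known boundary values to the right side
    rhs[-1] += right
    # Thomas algorithm for the tridiagonal matrix with 2 on the diagonal
    # and -1 on both off-diagonals: forward elimination.
    c_prime = [0.0] * m
    d_prime = [0.0] * m
    c_prime[0] = -1.0 / 2.0
    d_prime[0] = rhs[0] / 2.0
    for i in range(1, m):
        denom = 2.0 + c_prime[i - 1]
        c_prime[i] = -1.0 / denom
        d_prime[i] = (rhs[i] + d_prime[i - 1]) / denom
    # Back substitution.
    interior = [0.0] * m
    interior[-1] = d_prime[-1]
    for i in range(m - 2, -1, -1):
        interior[i] = d_prime[i] - c_prime[i] * interior[i + 1]
    return [left] + interior + [right]


# Original ramp 0, 1, 2, 3, 4 has differences [1, 1, 1, 1].
# Edit the gradient field so the slope concentrates at both ends.
edited = solve_poisson_1d([2.0, 0.0, 0.0, 2.0], left=0.0, right=4.0)
print(edited)

# An inconsistent edit (differences sum to 8, boundary spans only 4)
# is resolved in the least-squares sense.
print(solve_poisson_1d([2.0, 2.0, 2.0, 2.0], left=0.0, right=4.0))
```

In the first call the edited gradients are consistent with the boundary values, so they are reproduced exactly; in the second the solver spreads the mismatch evenly, which is why gradient-domain edits tend to look smooth.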

Controllable Smoke Animation with Guiding Objects

Lin Shi and Yizhou Yu. ACM Transactions on Graphics, Vol 24, No 1, 2005, PDF

This article addresses the problem of controlling the density and dynamics of smoke (a gas phenomenon) so that the synthetic appearance of the smoke (gas) resembles a still or moving object. Both the smoke region and the target object are represented as implicit functions. As a part of the target implicit function, a shape transformation is generated between an initial smoke region and the target object. In order to match the smoke surface with the target surface, we impose carefully designed velocity constraints on the smoke boundary during a dynamic fluid simulation. The velocity constraints are derived from an iterative functional minimization procedure for shape matching. The dynamics of the smoke is formulated using a novel compressible fluid model which can effectively absorb the discontinuities in the velocity field caused by imposed velocity constraints while reproducing realistic smoke appearances. As a result, a smoke region can evolve into a regular object and follow the motion of the object, while maintaining its smoke appearance.

Extracting Objects from Range and Radiance Images

Yizhou Yu, Andras Frencz and Jitendra Malik. IEEE Transactions on Visualization and Computer Graphics, Vol 7, No 4, 2001, PDF

In this project, we developed two key techniques necessary for editing a real scene captured with both cameras and laser range scanners. We developed automatic algorithms to segment the geometry from range images into distinct surfaces, and to register texture from radiance images with the geometry. The result is an object-level representation of the scene which can be rendered with modifications to structure via traditional rendering methods.

The algorithms have been applied to large-scale real data. We can reconstruct meshes and texture maps for individual objects and render them realistically. We demonstrate our ability to edit a captured scene by moving, inserting, and deleting objects.

Surface Reconstruction from Unorganized Points Using Neural Networks

Yizhou Yu. IEEE Visualization 1999, PDF

We introduce a novel technique for surface reconstruction from unorganized points by applying Kohonen's self-organizing map. The topology of the surface is predetermined, and a neural network learning algorithm is carried out to obtain correct 3D coordinates at each vertex of the surface. Edge swap and multiresolution learning are proposed to make the algorithm more effective and more efficient. The whole algorithm is very simple to implement. Experimental results have shown our techniques are successful.
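The core of Kohonen's self-organizing map, as used in this abstract, is a fixed topology whose vertex coordinates are learned from samples: for each sample, find the closest vertex and pull it and its topological neighbours toward the sample. The sketch below applies that rule to a ring of nodes fitted to 2D points on a circle. It is a reduced 2D illustration under assumed learning-rate and neighbourhood schedules; the edge swap and multiresolution learning from the paper are not included, and all names are hypothetical.

```python
import math
import random


def train_som_ring(points, n_nodes=16, epochs=100, seed=0):
    """Fit a closed ring of nodes (fixed topology) to 2D points."""
    rng = random.Random(seed)
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    # Start with all nodes near the centroid, with a little jitter.
    nodes = [[cx + rng.uniform(-0.01, 0.01), cy + rng.uniform(-0.01, 0.01)]
             for _ in range(n_nodes)]
    total_steps = epochs * len(points)
    step = 0
    for _ in range(epochs):
        for p in rng.sample(points, len(points)):
            t = step / total_steps
            lr = 0.01 + 0.5 * (1.0 - t)                    # decaying learning rate
            sigma = max(0.5, (n_nodes / 4.0) * (1.0 - t))  # shrinking neighbourhood
            # Best matching unit: the node closest to the sample.
            bmu = min(range(n_nodes),
                      key=lambda i: (nodes[i][0] - p[0]) ** 2 + (nodes[i][1] - p[1]) ** 2)
            for i in range(n_nodes):
                # Topological distance along the ring, not Euclidean distance.
                ring = min(abs(i - bmu), n_nodes - abs(i - bmu))
                hgt = math.exp(-(ring ** 2) / (2.0 * sigma ** 2))
                nodes[i][0] += lr * hgt * (p[0] - nodes[i][0])
                nodes[i][1] += lr * hgt * (p[1] - nodes[i][1])
            step += 1
    return nodes


def mean_nearest_node_distance(points, nodes):
    """Average distance from each sample to its closest node."""
    return sum(min(math.dist(p, q) for q in nodes) for p in points) / len(points)


circle = [(math.cos(2.0 * math.pi * k / 64), math.sin(2.0 * math.pi * k / 64))
          for k in range(64)]
ring_nodes = train_som_ring(circle)
print(mean_nearest_node_distance(circle, ring_nodes))
```

Before training every node sits near the centre, so the mean sample-to-node distance is close to 1; after training it drops as the ring spreads out along the circle.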