Project Objective

Breakthroughs in deep learning and mobile processors are radically changing the way we use our smartphones. However, few studies have addressed optimizing mobile deep learning frameworks for inference speed and memory footprint, which hinders wider use of deep learning on mobile platforms. In this work, we present the design and implementation of MobileDL, a toolkit dedicated exclusively to mobile devices. MobileDL significantly accelerates the inference stage of convolutional neural networks with the help of three novel methodologies: (1) Convolutional Neural Network Compression; (2) Zero-Copy; and (3) Half-Precision Computation Support. Among these optimization methods, Convolutional Neural Network Compression is the core of the project. It includes two compression methods: Channels-Oriented K-Means Clustering Compression and Mask-Based Neural Network Recovery. Experiments on several well-known benchmarks demonstrate about a 3x speed-up with merely a 1% loss of classification accuracy. MobileDL also achieves a significant reduction in memory usage, which is critical for mobile and embedded devices. With MobileDL, more innovative and fascinating mobile applications can be turned into reality.
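To give a rough feel for why half precision matters for memory, the sketch below stores the same weights as 32-bit and 16-bit floats using Python's standard `struct` module. It is only an illustration of storage size and rounding error, not MobileDL's implementation; the helper names and the sample weights are hypothetical.

```python
import struct

def pack_weights(weights, fmt):
    """Serialize weights as little-endian floats.

    fmt is "f" for 32-bit floats or "e" for 16-bit (half-precision) floats.
    """
    return struct.pack("<%d%s" % (len(weights), fmt), *weights)

def unpack_weights(blob, fmt):
    """Inverse of pack_weights: recover a list of Python floats."""
    count = len(blob) // struct.calcsize("<" + fmt)
    return list(struct.unpack("<%d%s" % (count, fmt), blob))

# Hypothetical weights, chosen only for illustration.
weights = [0.5, -0.1234, 0.9876, 0.001, -0.75]

full = pack_weights(weights, "f")   # 4 bytes per weight
half = pack_weights(weights, "e")   # 2 bytes per weight

# Round-trip through half precision to see how much precision is lost.
restored = unpack_weights(half, "e")
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("float32 bytes:", len(full))
print("float16 bytes:", len(half))
print("max rounding error:", max_error)
```

Half precision halves the storage per weight at the cost of a small rounding error. Whether the arithmetic itself also runs faster depends on hardware support for 16-bit floats, which this sketch does not model.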

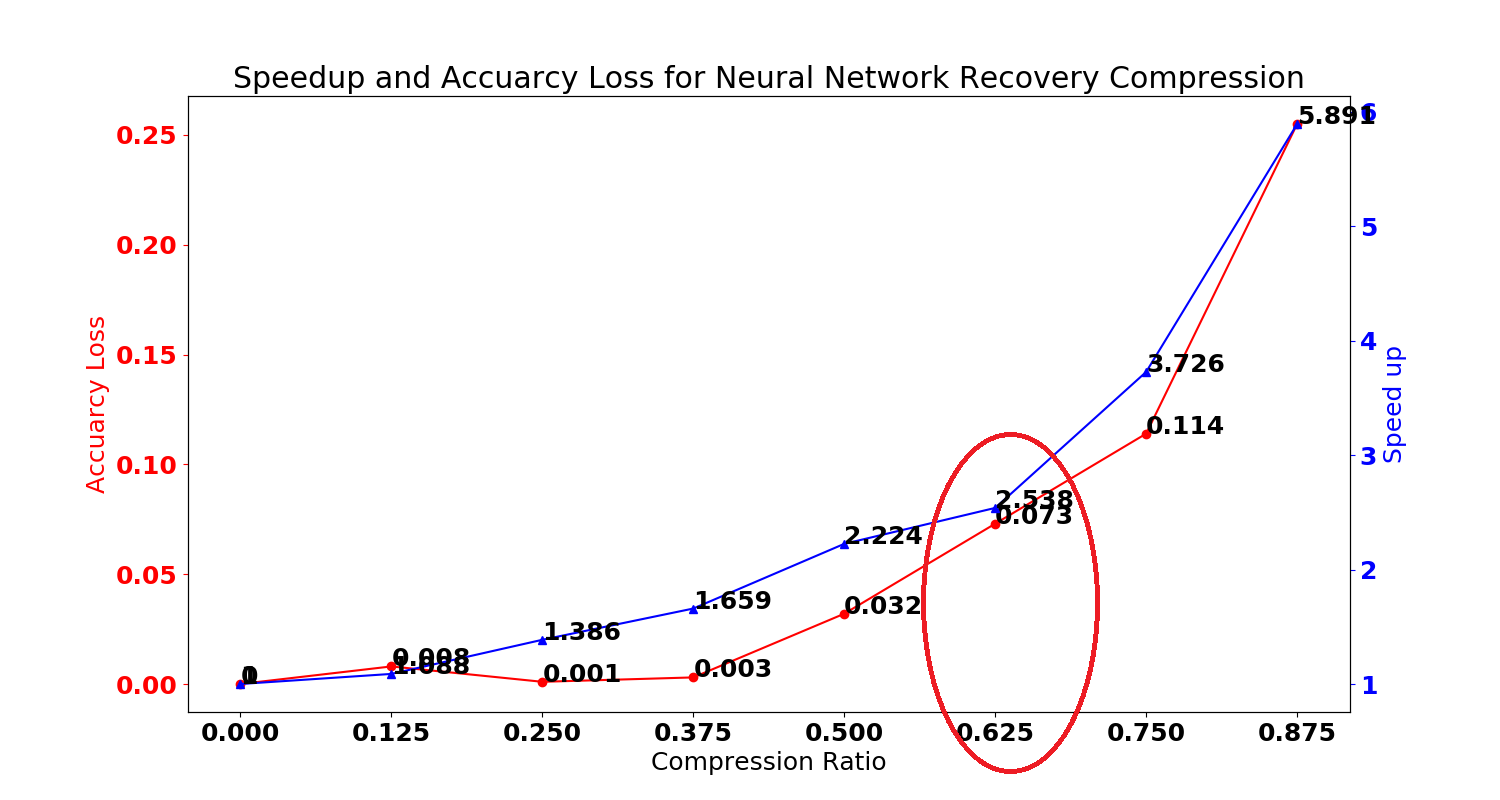

Mask-Based Neural Network Recovery Compression

Mask-Based Neural Network Recovery Compression performs better on both AlexNet and LeNet. As the figure shows, even at a compression ratio of 67.5%, the accuracy loss is a mere 7.2%.
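The page does not describe the algorithm in detail, so the following is only a minimal sketch of one common way a mask-based scheme can work. It assumes that a binary mask removes the smallest-magnitude weights and that, during a recovery (fine-tuning) step, only the surviving weights are updated. The function names, the magnitude criterion, and the toy values are all assumptions made for illustration, not MobileDL's actual method.

```python
def build_mask(weights, compression_ratio):
    """Return a 0/1 mask that drops the smallest-magnitude weights.

    compression_ratio is the fraction of weights to remove (assumption).
    """
    n_drop = int(len(weights) * compression_ratio)
    # Indices sorted by ascending magnitude; the first n_drop are pruned.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_drop]:
        mask[i] = 0
    return mask

def apply_mask(weights, mask):
    """Zero out the pruned weights."""
    return [w * m for w, m in zip(weights, mask)]

def masked_update(weights, grads, mask, lr=0.1):
    """One recovery step: gradient descent that keeps pruned weights at zero."""
    return [(w - lr * g) * m for w, g, m in zip(weights, grads, mask)]

# Toy layer with 8 weights (hypothetical values).
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.03]
mask = build_mask(weights, 0.675)   # drops int(8 * 0.675) = 5 weights
pruned = apply_mask(weights, mask)

# Pretend every weight received a gradient of 1.0 during fine-tuning.
recovered = masked_update(pruned, [1.0] * len(weights), mask)

print(mask)
print(pruned)
print(recovered)
```

Keeping the mask fixed during fine-tuning lets the remaining weights adapt to compensate for the removed ones while the compressed structure is preserved.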

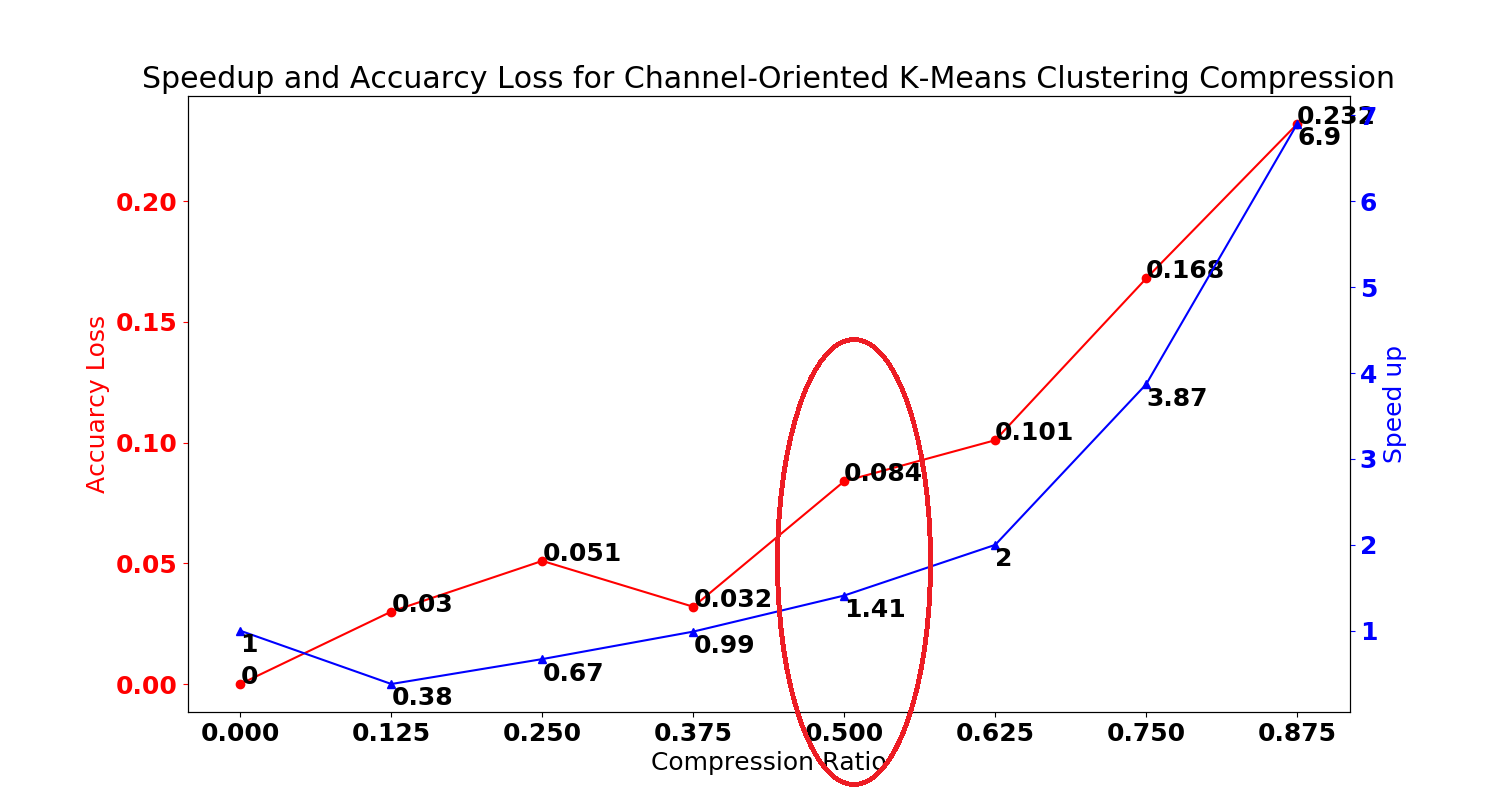

Channels-Oriented K-Means Clustering Compression

For Channels-Oriented K-Means Clustering, a compression rate of 50% causes about an 8.5% relative increase in classification error. In other words, statistically, for every 100 images misclassified by the uncompressed neural network, the compressed network misclassifies about 108.
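The arithmetic behind this statement can be sketched as follows, assuming the 8.5% figure is a relative increase in the number of misclassified images (which is how the 100-versus-108 example reads). The function names and the 80% baseline accuracy are hypothetical and serve only to illustrate the calculation.

```python
def errors_after_compression(baseline_errors, relative_increase):
    """Expected misclassifications after compression.

    relative_increase is the fractional growth in errors, e.g. 0.085 for 8.5%.
    """
    return baseline_errors * (1.0 + relative_increase)

def accuracy_after_compression(baseline_accuracy, relative_increase):
    """Convert a relative error increase into an absolute accuracy."""
    baseline_error = 1.0 - baseline_accuracy
    return 1.0 - baseline_error * (1.0 + relative_increase)

# 100 baseline misclassifications grow to roughly 108.5.
print(errors_after_compression(100, 0.085))

# Hypothetical baseline: a network that is 80% accurate before compression.
print(accuracy_after_compression(0.80, 0.085))
```

Under this reading, a network with a hypothetical 80% baseline accuracy would drop to roughly 78.3%, a much smaller change than an absolute 8.5-point loss would imply.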

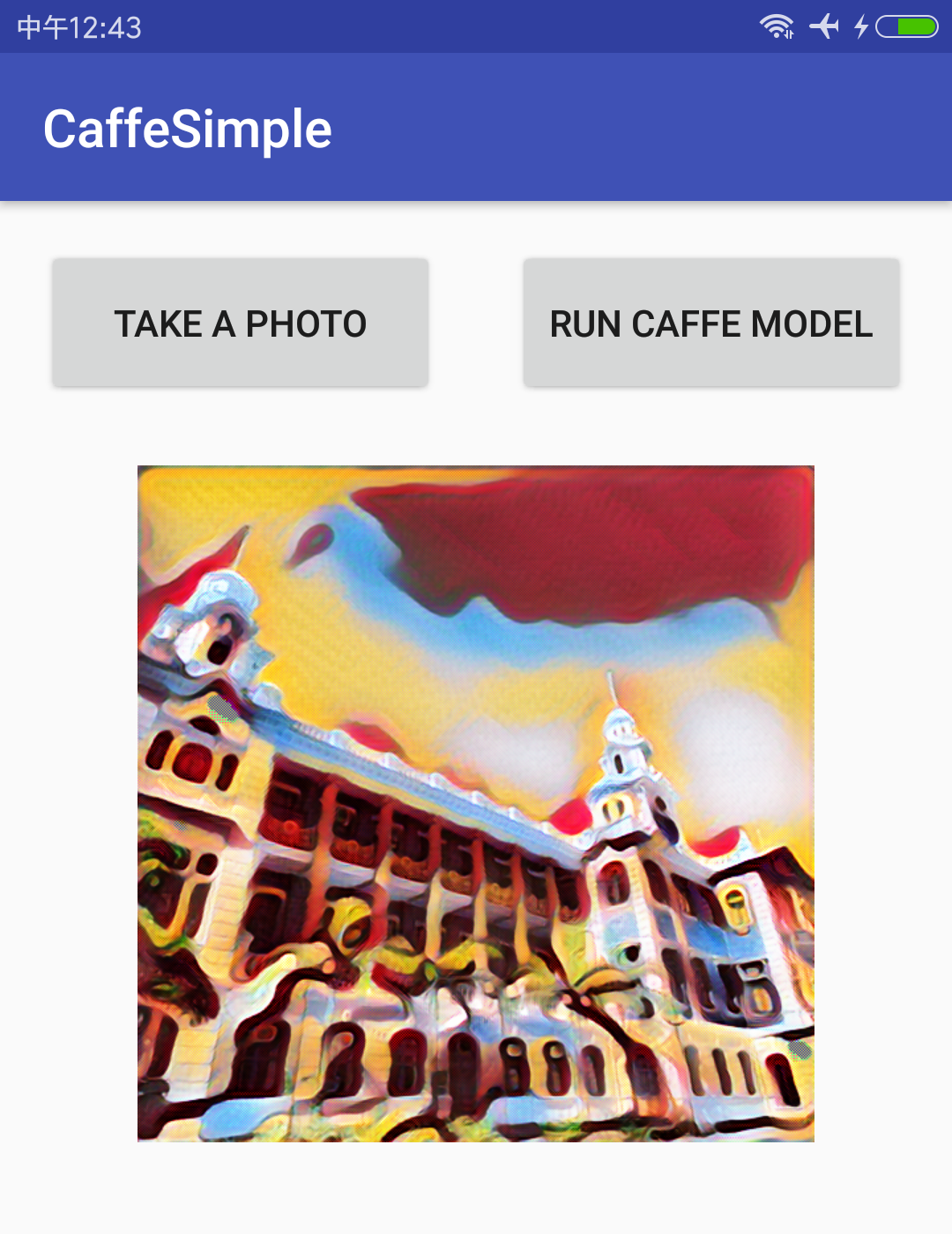

Art Style Transfer

In recent years, entertainment uses of deep learning have become increasingly popular. Among these applications, style transfer is possibly the most difficult. Style transfer renders a photo in a different art style by separating and recombining the content of the original photo and the style of a painting. Unlike conventional image processing, which applies simple filters or transformation matrices to change the colors or shapes in an image, a neural network can extract the art style of the painting and understand the content of the photo. The network then redraws the photo, so the result may look very different from the original.

We have ported this fascinating application from desktops to mobile devices, as shown in the figure on the left. The neural network is large: even on a desktop CPU (Intel i7 4790), inference takes about 4.7 seconds. Without neural network compression, inference on the XiaoMi 6, which is equipped with a Snapdragon 835 and 6 GB of LPDDR4 main memory, takes about 8 seconds.

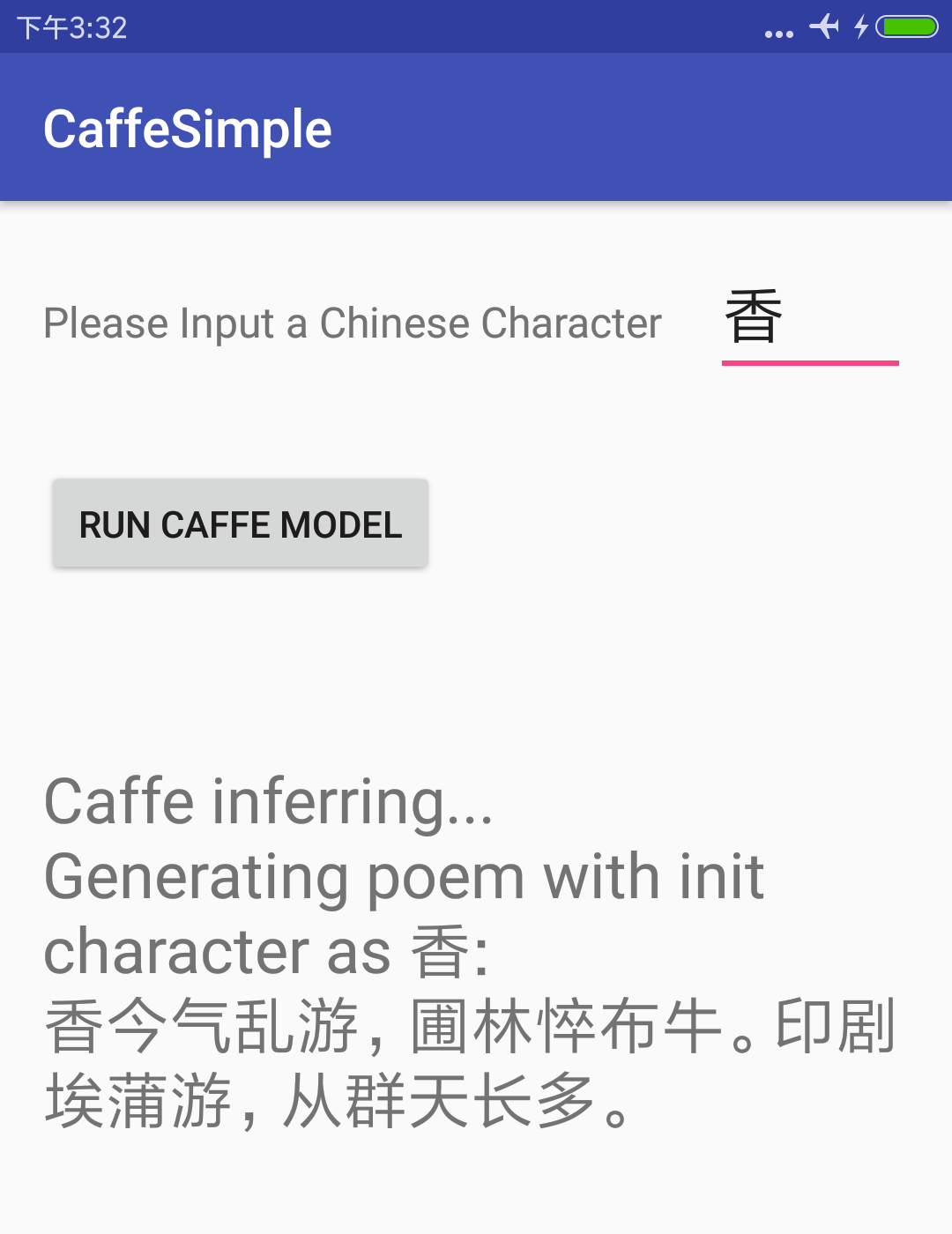

Tang Poems Generator

Chinese classical poetry is undoubtedly one of the most beautiful cultural heritages in the world, and it has deeply influenced the Chinese people and their culture, especially in ancient times. Research on generating Chinese poems automatically dates back to the 1960s. However, most of these early attempts used statistical methods, with which it is difficult to generate meaningful sentences.

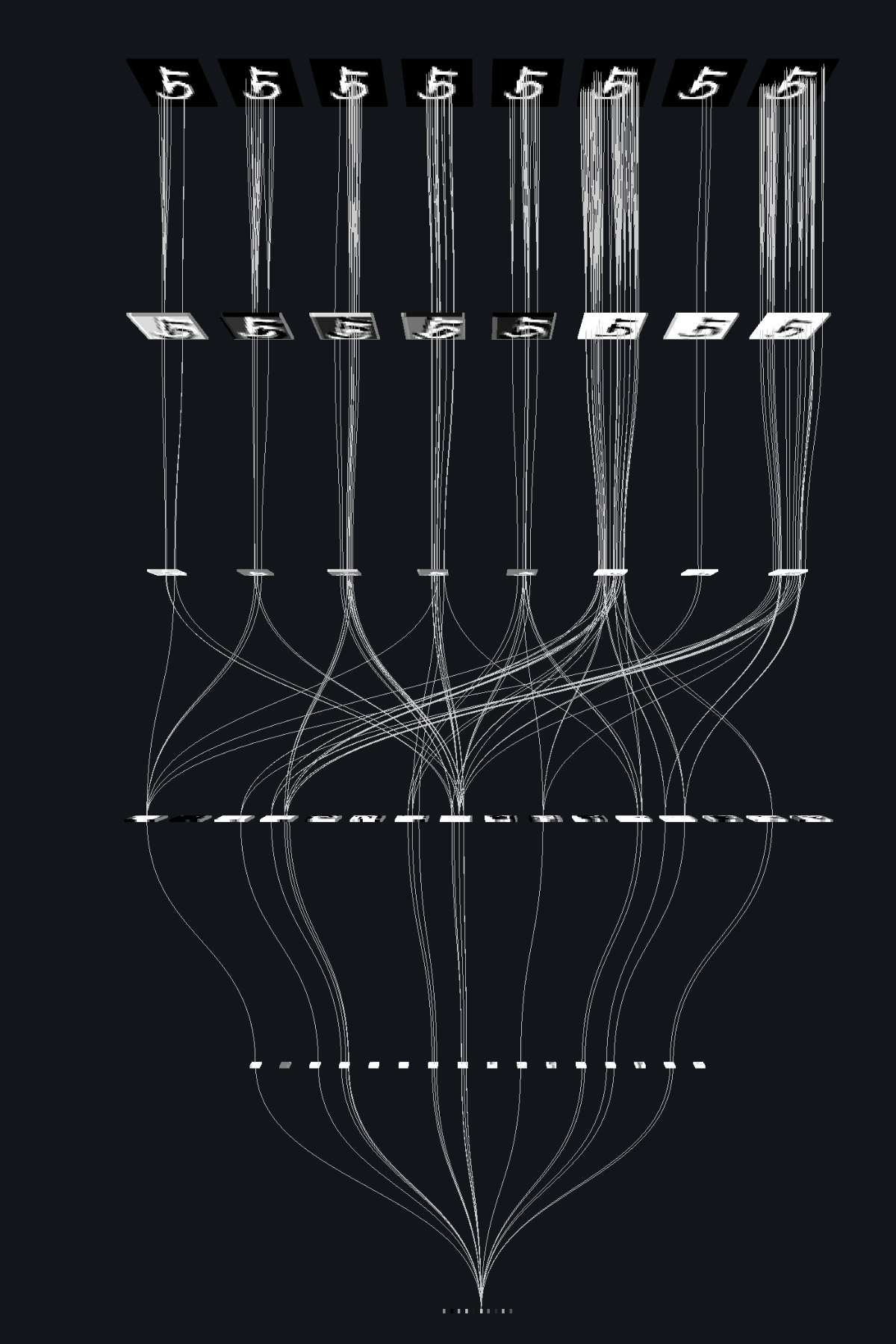

With the development of deep learning, natural language processing has also made impressive progress. A recent study applied a well-known type of deep neural network, the recurrent neural network, to Chinese poem generation. We have also ported this Chinese poem generator, a typical natural language processing application, to mobile platforms. The generator composes a Chinese poem automatically from an initial character; apart from the deep learning model and the initial character, no other information is provided to the application. Because the core operations of a recurrent neural network are matrix multiplications, the generator can be significantly sped up by our optimizations.
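To illustrate why matrix multiplication dominates the cost, here is a minimal sketch of character-by-character generation with a plain (Elman-style) recurrent cell. It is not the ported model: the five-character vocabulary, the hidden size, the random untrained weights, and the greedy choice of the next character are all assumptions made for illustration, so the output is not a meaningful poem.

```python
import math
import random

def matvec(matrix, vector):
    """Matrix-vector product: the core operation of each recurrent step."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def one_hot(index, size):
    """Encode a character index as a one-hot input vector."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def rnn_step(x, h, W_xh, W_hh, W_hy):
    """One recurrent step: three matrix-vector products plus a tanh."""
    pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h))]
    h_new = [math.tanh(v) for v in pre]
    logits = matvec(W_hy, h_new)
    return h_new, logits

def generate(initial_char, vocab, weights, length):
    """Greedily generate `length` characters starting from initial_char."""
    W_xh, W_hh, W_hy = weights
    h = [0.0] * len(W_hh)
    idx = vocab.index(initial_char)
    out = [initial_char]
    for _ in range(length - 1):
        h, logits = rnn_step(one_hot(idx, len(vocab)), h, W_xh, W_hh, W_hy)
        # Pick the highest-scoring character as the next one.
        idx = max(range(len(vocab)), key=lambda i: logits[i])
        out.append(vocab[idx])
    return "".join(out)

def random_matrix(rows, cols, rng):
    """Untrained placeholder weights, uniform in [-1, 1]."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
vocab = ["春", "风", "花", "月", "山"]   # hypothetical toy vocabulary
hidden = 4
weights = (
    random_matrix(hidden, len(vocab), rng),  # input-to-hidden
    random_matrix(hidden, hidden, rng),      # hidden-to-hidden
    random_matrix(len(vocab), hidden, rng),  # hidden-to-output
)

poem = generate("春", vocab, weights, 5)
print(poem)
```

Every generated character costs three matrix-vector products, so any speed-up of matrix multiplication applies to essentially the whole inference loop.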

Contact Us

Prof. Wang

Tel: 2857 8458

Email: clwang(@)cs.hku.hk

JI ZHUORAN

Tel: 6675 6776

Email: jizr@connect.hku.hk