
3D Human Model Reconstruction from Sparse Uncalibrated Views

IEEE Computer Graphics and Applications

Xiaoguang Han, Kenneth K.Y. Wong, Yizhou Yu

The University of Hong Kong

Abstract

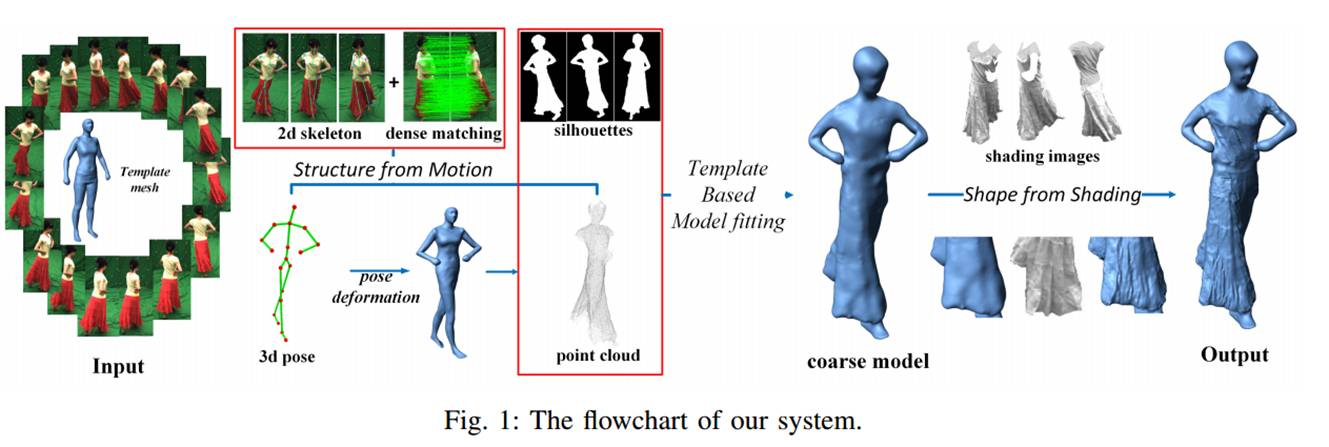

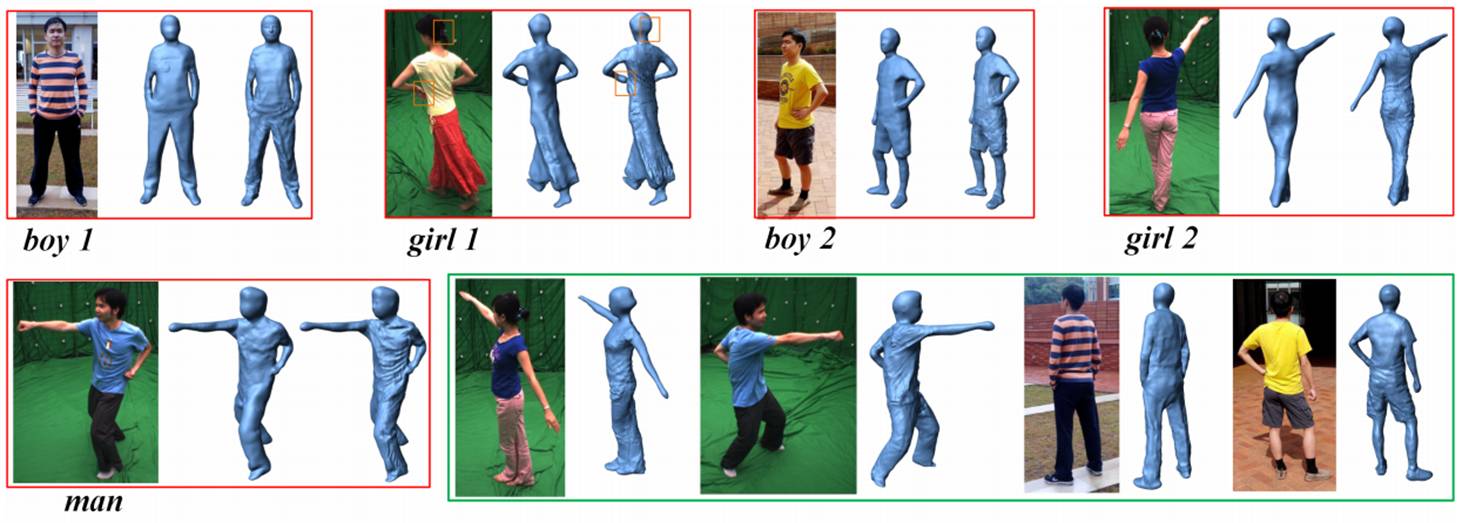

This paper presents a novel two-stage algorithm for reconstructing 3D models of humans wearing regular clothes from sparse uncalibrated views. The first stage reconstructs a coarse model with the help of a template model for human figures. A non-rigid dense correspondence algorithm is applied to generate denser correspondences than traditional feature descriptors provide. We fit the template model to the point cloud reconstructed from the dense correspondences while keeping it enclosed by the visual hull. In the second stage, the coarse model from the first stage is refined with geometric details, such as wrinkles, reconstructed from shading information. To successfully extract shading information for a surface with non-uniform reflectance, a hierarchical density-based clustering algorithm is adapted to obtain high-quality pixel clusters. Geometric details reconstructed using our new shading extraction method exhibit superior quality. Our algorithm has been validated with images from an existing dataset and images captured by a cell phone camera.
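The visual hull constraint mentioned in the first stage can be illustrated with a small sketch: a 3D point lies inside the visual hull only if it projects onto the foreground silhouette in every view. The code below is an illustration under stated assumptions, not the paper's implementation. It assumes the camera projection matrices have already been estimated (the input views are uncalibrated, so this would have to happen first), and the function name `inside_visual_hull` and its parameters are hypothetical.

```python
import numpy as np

def inside_visual_hull(points, projections, silhouettes):
    """Return a boolean mask, True where a point projects inside every silhouette.

    points:      (N, 3) array-like of 3D points
    projections: list of (3, 4) projection matrices (assumed already estimated)
    silhouettes: list of (H, W) boolean masks, True on the foreground
    """
    points = np.asarray(points, dtype=float)
    # Homogeneous coordinates, shape (N, 4)
    homog = np.hstack([points, np.ones((len(points), 1))])
    inside = np.ones(len(points), dtype=bool)

    for P, mask in zip(projections, silhouettes):
        proj = homog @ np.asarray(P, dtype=float).T  # (N, 3)
        depth = proj[:, 2]
        in_front = depth > 1e-9  # discard points behind the camera
        safe_depth = np.where(in_front, depth, 1.0)
        u = np.round(proj[:, 0] / safe_depth).astype(int)  # pixel column
        v = np.round(proj[:, 1] / safe_depth).astype(int)  # pixel row

        height, width = mask.shape
        in_image = in_front & (u >= 0) & (u < width) & (v >= 0) & (v < height)

        # A point passes this view only if it lands on a foreground pixel
        hit = np.zeros(len(points), dtype=bool)
        hit[in_image] = mask[v[in_image], u[in_image]]
        inside &= hit

    return inside
```

A test of this kind could serve as a penalty or a hard constraint when fitting template vertices, although the abstract does not say how the enclosure is enforced.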

Downloads

Paper, Supplemental material, Slides, Data

Results

Acknowledgements

We wish to thank the anonymous reviewers for their valuable comments. This work was partially supported by Hong Kong Research Grants Council under General Research Funds (HKU718712).

Bibtex

@ARTICLE{humanReconCGA:2015,
